Source code

import json
import os
import time

import jsonpath
import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/103.0.5060.114 Safari/537.36',
    'referer': 'https://www.zhihu.com/question/26297181',
    'cookie': ''
}

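# Send a request and return the JSON response body
def get_data(url):
    # Send the request to fetch the JSON data
    resp = requests.get(url=url, headers=headers, timeout=10)
    # Raise on HTTP errors so the caller can stop paging
    resp.raise_for_status()
    content = resp.content
    # Return the raw JSON data
    return content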

# Parse the JSON data
def parse_data(content):
    # Load the JSON data
    data = json.loads(content)
    # Extract the list of answers
    answer_list = jsonpath.jsonpath(data, '$..data')
    # Extract the request URL of the next page
    answer_next = jsonpath.jsonpath(data, '$..paging..next')[0]
    # Dict mapping each author's name to a list of image src URLs
    answer_dict = {}
    # Iterate over the content of each answer
    for answer in answer_list[0]:
        # Extract the author's name
        answer_name = jsonpath.jsonpath(answer, '$..author..name')[0]
        # Extract the answer content
        answer_content = str(jsonpath.jsonpath(answer, '$..target..content'))
        # List of image src URLs found in this answer
        src_list = []
        # Walk through the content to collect every image
        while True:
            # Start of the image src: just past 'data-actualsrc="' (16 characters)
            start_index = answer_content.find('data-actualsrc=\"') + 16
            # End of the image src
            end_index = answer_content.find('?source=1940ef5c')
            # Only slice when the end marker was found (find returns -1 otherwise)
            if end_index != -1:
                # The actual image src URL, with the '_720w' size suffix removed
                src = answer_content[start_index:end_index].replace('_720w', '')
                # Keep it only if it is new, does not contain '_r', and has a plausible length
                if src not in src_list and '_r' not in src and 0 < len(src) < 100:
                    src_list.append(src)
                # Drop the part already scanned (end marker plus the closing quote)
                answer_content = answer_content[end_index + 17:]
            # No end marker left: stop scanning this answer
            else:
                break
        # Extend rather than overwrite, since several answers may share an author name
        answer_dict.setdefault(answer_name, []).extend(src_list)
    # Return the src dict and the next-page API URL
    return answer_dict, answer_next

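# Download every image into a folder named after the answer's author
def download_img(answer_dict, save_path):
    # Iterate over the dict to get each name and src list
    for name, src_list in answer_dict.items():
        # Iterate over the src list
        for src in src_list:
            # Send a request to fetch the image
            img_resp = requests.get(src, headers=headers)
            # Build the save path
            path = save_path + f'{name}'
            # Create the directory if it does not exist yet
            os.makedirs(path, exist_ok=True)
            # Save the image; a nanosecond timestamp avoids overwriting
            # files that are downloaded within the same second
            with open(f'{path}/{time.time_ns()}.jpg', 'wb') as img_f:
                img_f.write(img_resp.content)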

if __name__ == '__main__':

# 設(shè)置起始api

url = 'https://www.zhihu.com/api/v4/questions/26297181/feeds?cursor=e9d89ca6466891518544484e84026815&include=data%5B%2A%5D.is_normal%2Cadmin_closed_comment%2Creward_info%2Cis_collapsed%2Cannotation_action%2Cannotation_detail%2Ccollapse_reason%2Cis_sticky%2Ccollapsed_by%2Csuggest_edit%2Ccomment_count%2Ccan_comment%2Ccontent%2Ceditable_content%2Cattachment%2Cvoteup_count%2Creshipment_settings%2Ccomment_permission%2Ccreated_time%2Cupdated_time%2Creview_info%2Crelevant_info%2Cquestion%2Cexcerpt%2Cis_labeled%2Cpaid_info%2Cpaid_info_content%2Creaction_instruction%2Crelationship.is_authorized%2Cis_author%2Cvoting%2Cis_thanked%2Cis_nothelp%2Cis_recognized%3Bdata%5B%2A%5D.mark_infos%5B%2A%5D.url%3Bdata%5B%2A%5D.author.follower_count%2Cvip_info%2Cbadge%5B%2A%5D.topics%3Bdata%5B%2A%5D.settings.table_of_content.enabled&limit=5&offset=0&order=default&platform=desktop&session_id=1657444499711409954'

save_path = input('請輸入要保存的路徑,以/結(jié)尾:')

if save_path[-1] != '/':

save_path = input('沒有以/結(jié)尾,請重新輸入保存路徑:')

while True:

try:

# 調(diào)用方法獲取數(shù)據(jù)

content = get_data(url=url)

except Exception:

break

# 調(diào)用方法解析圖片地址

answer_dict, url = parse_data(content=content)

# 調(diào)用方法下載圖片

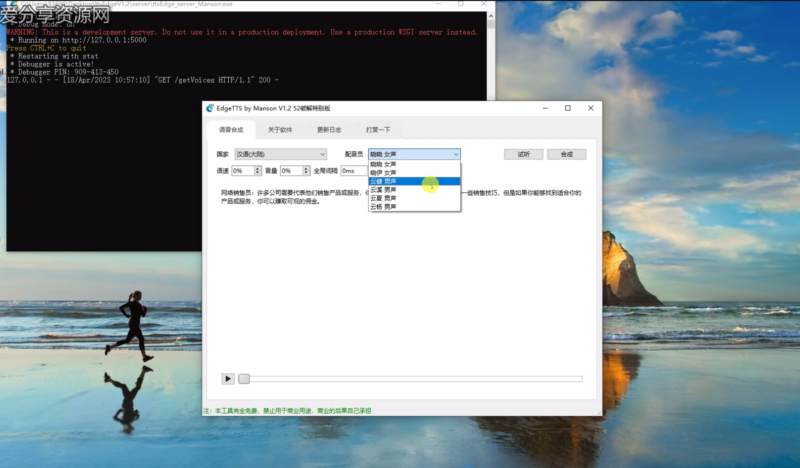

download_img(answer_dict, save_path)實現(xiàn)一個知乎下的簡單爬行圖,雖然簡單,但基本涵蓋了所有爬蟲的基礎(chǔ)知識,小白可以學(xué)習(xí),畢竟簡單爬圖還是沒有問題的。
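
The manual `find` loop in `parse_data` can also be expressed with a regular expression. The following is a minimal sketch of that idea, not the approach used in the listing above. It assumes, as the listing does, that each image tag carries a `data-actualsrc="..."` attribute whose URL is followed by a `?source=...` query string. The helper name `extract_image_urls` and the sample HTML (including the `example.com` URLs) are hypothetical.

```python
import re

# Capture the URL inside data-actualsrc="...", stopping before the
# query string ('?') or the closing quote, whichever comes first.
_SRC_RE = re.compile(r'data-actualsrc="([^"?]+)')


def extract_image_urls(html):
    """Return de-duplicated image URLs found in a piece of answer HTML."""
    urls = []
    for src in _SRC_RE.findall(html):
        # Same filter as the listing: skip URLs containing '_r'
        if '_r' in src:
            continue
        # Strip the '_720w' size suffix, as the listing does
        src = src.replace('_720w', '')
        if src not in urls:
            urls.append(src)
    return urls


# Hypothetical sample input, made up for illustration only
sample = (
    '<img data-actualsrc="https://pic1.example.com/v2-abc_720w.jpg?source=1940ef5c">'
    '<img data-actualsrc="https://pic1.example.com/v2-abc_720w.jpg?source=1940ef5c">'
    '<img data-actualsrc="https://pic1.example.com/v2-def_r.jpg?source=1940ef5c">'
)
print(extract_image_urls(sample))
```

Because the function takes a plain string and makes no network calls, it can be checked against saved response snippets before running the full scraper.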

![Image [1] - Scraping images from a Zhihu question](http://m.oilmaxhydraulic.com.cn/wp-content/uploads/2022/11/8fba58f5e9140245.jpg)
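
The main loop in the listing follows the `next` URL from each response and stops only when something raises an exception. A more explicit stopping condition could look like the sketch below. It rests on assumptions that the source does not confirm: that each response has a `paging` object with a `next` URL (as the listing's JSONPath query suggests) and also an `is_end` boolean flag. `iter_pages`, `fetch`, and the fake pages are hypothetical names used only for illustration.

```python
def iter_pages(fetch, start_url, max_pages=100):
    """Yield parsed pages, following paging.next until the last page.

    `fetch` is any callable that takes a URL and returns the parsed
    JSON response as a dict.
    """
    url = start_url
    # max_pages is a safety cap so a misbehaving API cannot loop forever
    for _ in range(max_pages):
        page = fetch(url)
        yield page
        paging = page.get('paging', {})
        # ASSUMPTION: 'is_end' marks the last page; a missing flag or a
        # missing 'next' URL is also treated as the end.
        if paging.get('is_end', True) or not paging.get('next'):
            break
        url = paging['next']


# Hypothetical in-memory pages standing in for real API responses
fake_pages = {
    'page1': {'data': ['a', 'b'], 'paging': {'is_end': False, 'next': 'page2'}},
    'page2': {'data': ['c'], 'paging': {'is_end': True, 'next': 'page3'}},
}

collected = [
    item
    for page in iter_pages(fake_pages.__getitem__, 'page1')
    for item in page['data']
]
print(collected)
```

Passing the fetch function in as a parameter keeps the paging logic separate from the HTTP code, so the loop can be exercised with fake data as shown here.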
